Tackling big data challenges for next-generation experiments

22 Feb 2021 01:50 PM

Scientists are developing vital software to exploit the large data sets collected by the next-generation experiments in high energy physics (HEP).

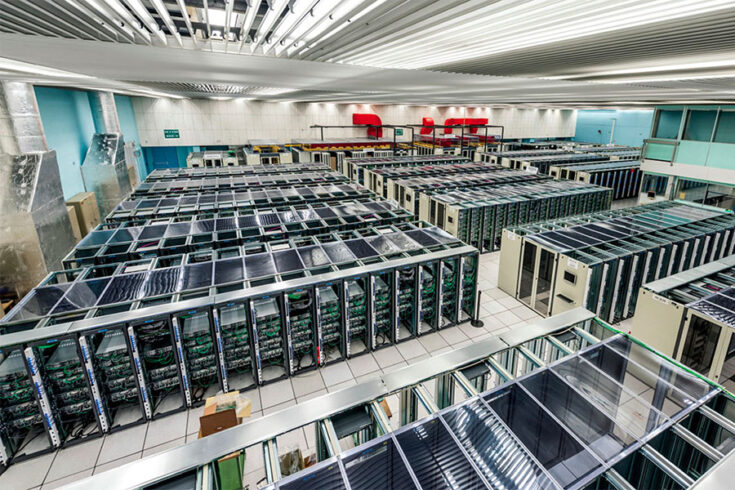

A view of the vast computing centre on CERN’s main site (credit: S Bennett/CERN)

The experiments are predominantly those at the Large Hadron Collider (LHC).

Over the years, the existing code has struggled to keep pace with the rising data output of large-scale experiments.

The new, optimised software will be able to crunch the masses of data that the LHC at CERN and next-generation neutrino experiments will produce this decade, such as the:

This is the first time the Science and Technology Facilities Council (STFC) has funded a team of UK researchers to develop a software-based project.

Processing big data

Professor Davide Costanzo, the Principal Investigator based at the University of Sheffield, said:

Modern particle physics experiments are capable of producing an exabyte of real and simulated data every year.

Modern software tools are needed to process the data and produce the physics results and discoveries that we, particle physicists, are so proud of. This is central to the exploitation of particle physics experiments in the decades to come.

Storing one exabyte of data on everyday machines would take almost one million powerful home computers.

A greener software

Without a software upgrade, the LHC’s computing resource requirements are expected to grow sixfold over the next decade.

Such growth would be too expensive, both in hardware and in the cost of running inefficient software, and would also mean higher electricity consumption.

The more efficient software the team develops will help reduce computing resource usage and the carbon footprint of data centres around the world.

The project aims to ensure scientists can exploit the physics capabilities of future HEP experiments while keeping computing costs affordable.

Developing this novel solution will also build skills that transfer to the wider UK economy.

Solving the big challenge

Conor Fitzpatrick, the Deputy Principal Investigator and UKRI Future Leaders Fellow based at the University of Manchester, said:

The data rates we expect from the LHC upgrades and future experimental infrastructure represent an enormous challenge: if we are to maximally exploit these state-of-the-art machines, we need to develop new and cost-effective ways to collect and process the data they will generate.

This challenge is by no means unique to scientific infrastructure. Similar issues are being faced by industry and society as we become increasingly reliant on real-time analysis of big data in our everyday lives.

It is very encouraging that STFC is supporting this effort, which brings together and enhances the significant software expertise present in the UK to address these challenges.

The UK’s involvement

The upgrade will be organised into a set of activities in which the UK has leading expertise and experience, involving work on:

- data management

- event generation

- simulation

- reconstruction

- data analysis.

The partners involved in the upgrade include UK universities and STFC’s Rutherford Appleton Laboratory (RAL), working in cooperation with CERN and other international stakeholders.

Staff at RAL are involved in the data management and reconstruction work packages, developing tracking tools for the LHC experiments.

The team aims to engage the wider community in developing the software that particle physics experiments need, with workshops planned twice a year.

Important milestone for 2024

The project’s key milestone for 2024 is to move from proof-of-concept studies to deployment, in time for the start of data-taking at the High-Luminosity LHC and next-generation neutrino experiments.

These are expected to dominate the particle physics scene in the second half of the decade.

Further information

The UK universities involved are:

- University of Birmingham

- University of Bristol

- Brunel University

- University of Cambridge

- Durham University

- The University of Edinburgh

- University of Glasgow

- Lancaster University

- The University of Liverpool

- Imperial College London

- King’s College London

- University College London (UCL)

- Royal Holloway, University of London

- Queen Mary, University of London

- The University of Manchester

- University of Oxford

- The University of Sheffield

- University of Sussex

- University of Warwick.