Ofcom


Encountering violent online content starts at primary school

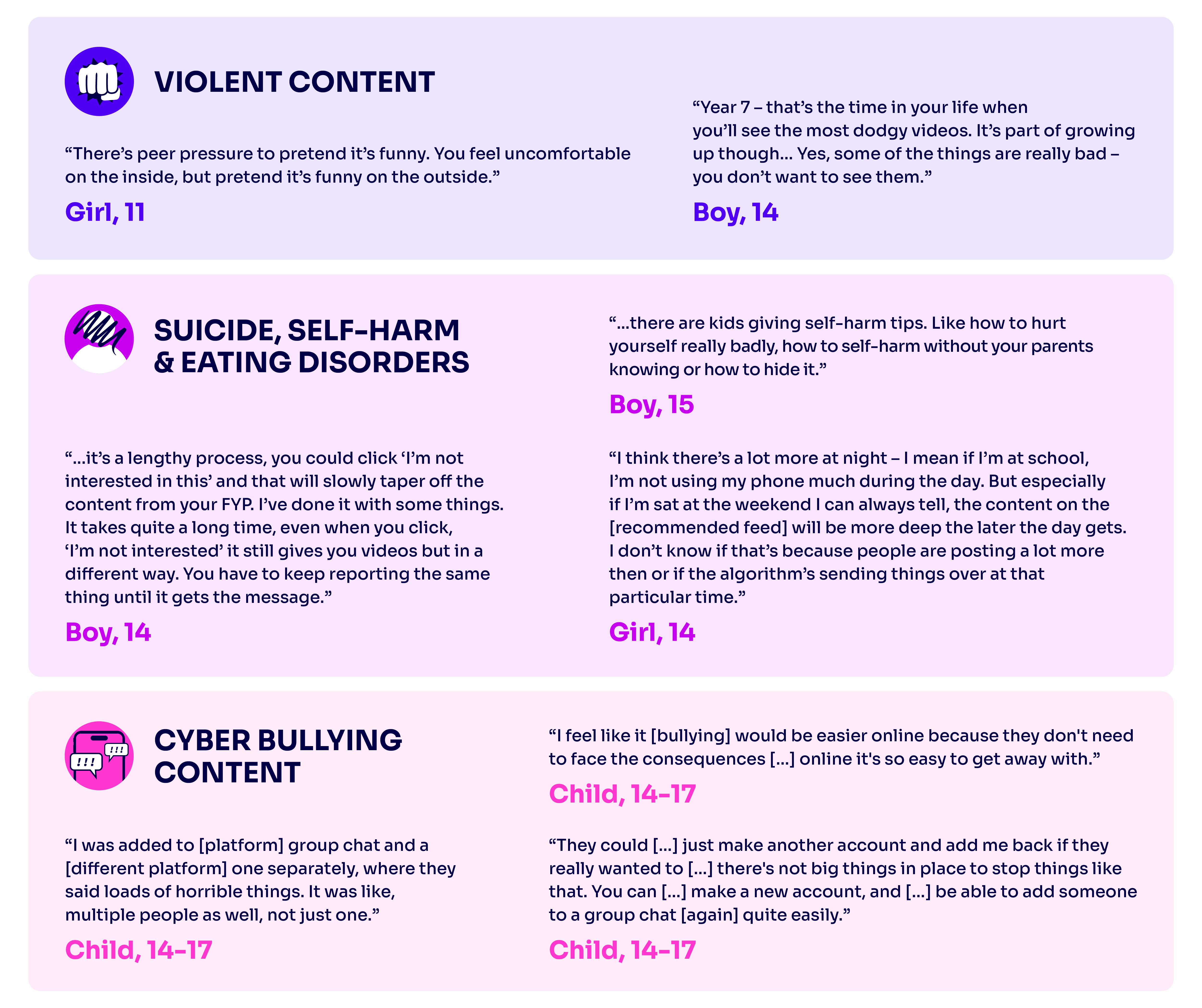

Children first see violent online content while still at primary school and describe it as an inevitable part of being online, according to new research commissioned by Ofcom.

- Children describe violent content as ‘unavoidable’ online

- Teenage boys more likely to share violent material to ‘fit in’ with peers

- Recommender algorithms and group messaging fuel exposure

- Children’s willingness to report harmful content undermined by lack of trust in process

All children who took part in the research came across violent content online, mostly via social media, video-sharing and messaging sites and apps. Many told us this first happened before they had reached the minimum age requirement for these services.

Violent 18+ gaming content, verbal discrimination and fighting content are commonly encountered by the children we spoke to. Sharing videos of local school and street fights has also become normalised for many of the children. For some, this is because of a desire to build online status among their peers and followers. For others, it is to protect themselves from being labelled as ‘different’ for not taking part.

Some children mention seeing extreme graphic violence, including gang-related content, albeit much less frequently. And while they’re aware that the most extreme violent material can be found on the dark web, none of the children we spoke to had accessed this themselves.

Teenage boys seek online kudos

The teenage boys we spoke to were the most likely to actively share and seek out violent content. This is often motivated by a desire to ‘fit in’ and gain popularity, due to the high levels of engagement this content attracts. Some 10–14-year-olds in the groups describe feeling pressure not only to watch violent content, but to find it ‘funny’, fearing isolation from their peers if they don’t. Older children appear to be more desensitised to violent content, and are less likely to share it.

Most of the children say they encounter violent content unintentionally via large group chats, posts from strangers on their newsfeeds, or through recommender systems which they refer to as ‘the algorithm’.

Many feel they have no control over the violent content suggested to them, or how to prevent it. This can make them feel upset, anxious, guilty – particularly given they rarely report it – and even fearful. The adult professionals we spoke to also observe that cumulative exposure to violent content contributes to some children becoming socially and physically withdrawn in the offline world.

Understanding pathways to serious harm

Today’s report, carried out by Family, Kids and Youth, is one in a series of research studies to build Ofcom’s understanding of children’s pathways to harm online. A second report, published today, examines children’s experiences of content relating to suicide, self-harm and eating disorders. A third looks at children’s experiences of cyberbullying.

The second study, conducted on Ofcom’s behalf by Ipsos UK and TONIC, reveals that children and young people who have encountered content relating to suicide, self-harm and eating disorders have a strong familiarity with such content. They characterise it as prolific on social media and feel frequent exposure contributes to a collective normalisation and often desensitisation to the gravity of these issues.

Children and young people tend to initially see this content unintentionally through personalised recommendations on their social media feeds, with the perception that algorithms then increase its volume. Those children and young people with lived experience of these issues tell us they are also more proactive and intentional in seeking out this content, for example through the use of adapted hashtags and community codewords. Some say they experienced a worsening of their symptoms after first viewing this content online, while others discovered previously unknown self-harm techniques.

There are also mixed views among the children and young people about what they consider to be ‘recovery’ content. They tell us that content can be deliberately tagged as such to attract followers, or to avoid detection and content moderation, but can in fact be more explicitly harmful.

A third research study, conducted on Ofcom’s behalf by the National Centre for Social Research in partnership with City University, and supported by the Anti-Bullying Alliance and The Diana Award, reveals that cyberbullying happens anywhere children interact online, with wide-ranging negative impacts on their emotional wellbeing and mental and physical health.

Children tell us that direct messaging and comment functionalities are fundamental enablers of cyberbullying, with some saying they had been targeted in group-chats they had been added to without giving permission. They also feel that the ease of setting up anonymous, fake, or multiple accounts means cyberbullying can take place with limited consequences.

Lack of trust and confidence in reporting process

A recurring theme in today’s studies is children’s lack of trust and confidence in the flagging and reporting process.

They worry, for example, that dwelling on harmful content in order to report it could lead to being recommended more of the same, so most choose to scroll past it instead. Other children describe their experience of reports getting lost in the system, and only receiving generic messages in reply to their report.

They also express concerns about the complexity of reporting processes and how widely approaches vary between platforms, and fear a lack of anonymity.

Protecting children from harm under the Online Safety Act

The children who took part in our studies want to see tech firms taking responsibility for keeping them safer online. And we’re clear that social media, messaging and video-sharing services must ensure they’re ready to fulfil their new duties under the Online Safety Act.

Specifically, user-to-user services must put in place measures designed to prevent children from encountering ‘Primary Priority Content’: pornography and material which encourages, promotes or provides instructions for suicide, deliberate self-harm, and eating disorders. They must also protect children from other ‘Priority’ harms (including violent content and cyberbullying).

Today’s research studies are an integral part of our broad evidence base to inform our draft Protection of Children Codes of Practice, due to be published for consultation later this spring. These Codes will set out the recommended practical steps tech firms can take to meet their requirements to protect children under the new laws.

During the consultation process, we’ll engage with industry and with civil society organisations involved in protecting children’s interests, and hear from children directly through a series of focused discussions, to inform our final decisions.

Ofcom will also shortly be consulting on its three-year strategy on promoting and supporting media literacy in the UK. Developing strong media literacy skills can help children engage with online services critically, safely and effectively. A report for Ofcom by Magenta on highly media literate children aged 8-12, published today, describes the steps highly media literate children take - in the absence of effective protections on online services - to reduce the risk of encountering harmful material, and how they make informed decisions about what they do online.

“Children should not feel that seriously harmful content - including material depicting violence or promoting self-injury - is an inevitable or unavoidable part of their lives online. Today’s research sends a powerful message to tech firms that now is the time to act so they’re ready to meet their child protection duties under new online safety laws. Later this spring, we’ll consult on how we expect the industry to make sure that children can enjoy an age-appropriate, safer online experience.”

Gill Whitehead, Ofcom’s Online Safety Group Director

Original article link: https://www.ofcom.org.uk/news-centre/2024/encountering-violent-online-content-starts-at-primary-school